The Malcore team is conducting speed tests of our sandbox against industry competitors. We are doing this because speed is a key capability of our source code, and it is what allows Malcore to scale.

We invite everyone across the industry to run their own tests and speed up their products. Every day we look to tighten our code and make Malcore faster!

Speed Results

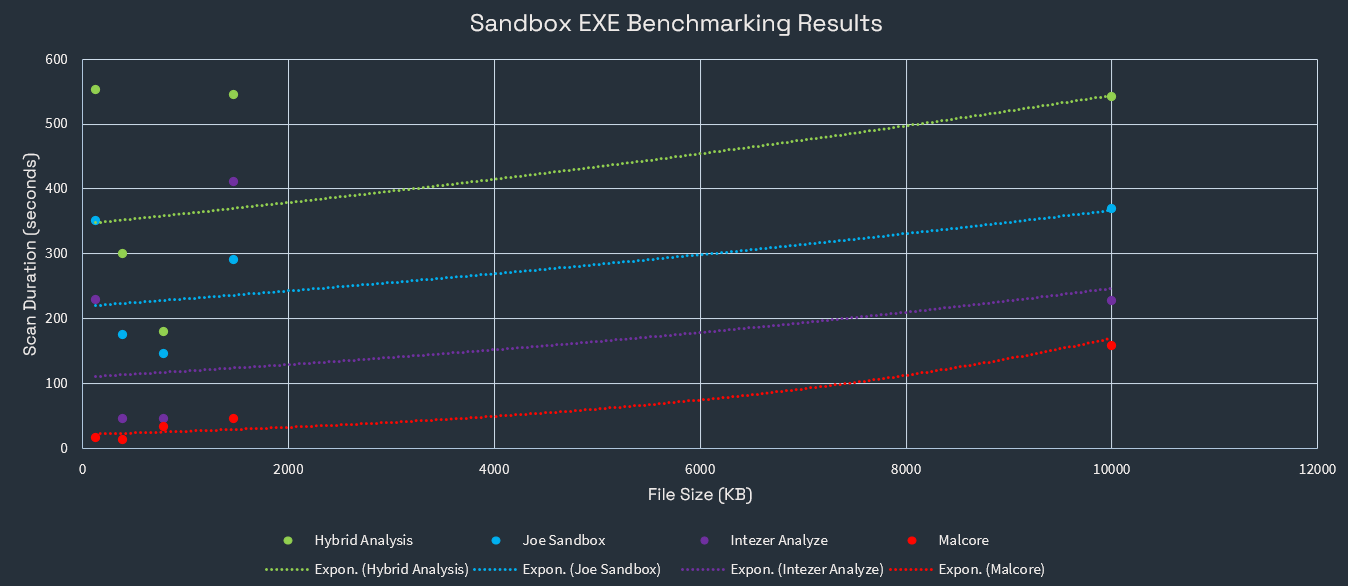

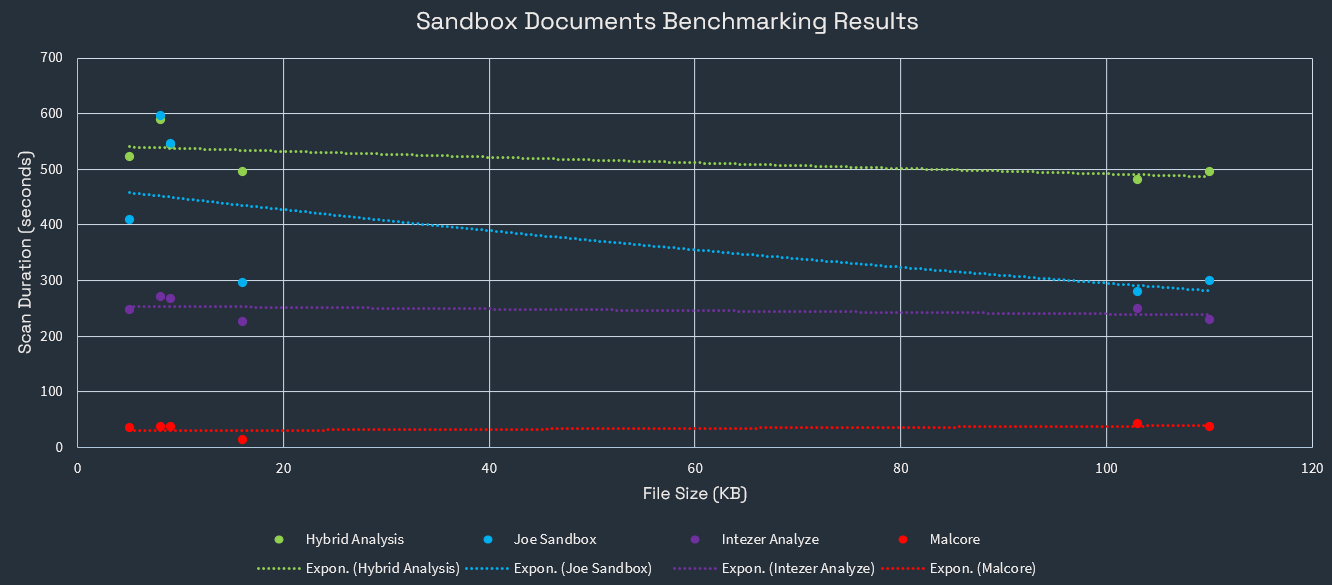

These results were broken down into three categories: executables, documents, and APKs. On every file tested, Malcore was the fastest and provided as much information in its analysis as the other platforms, or more. Overall, Malcore was the fastest and most accurate sandbox environment tested, running up to 19x faster than the competition.

In executable speed testing, Malcore recorded the fastest time on every test and was, on average, 9.5 times faster than the competition.

Malcore also recorded the fastest times for document analysis, averaging 12.5 times faster than the competition. All of these scans are performed by our partner, InQuest Labs.

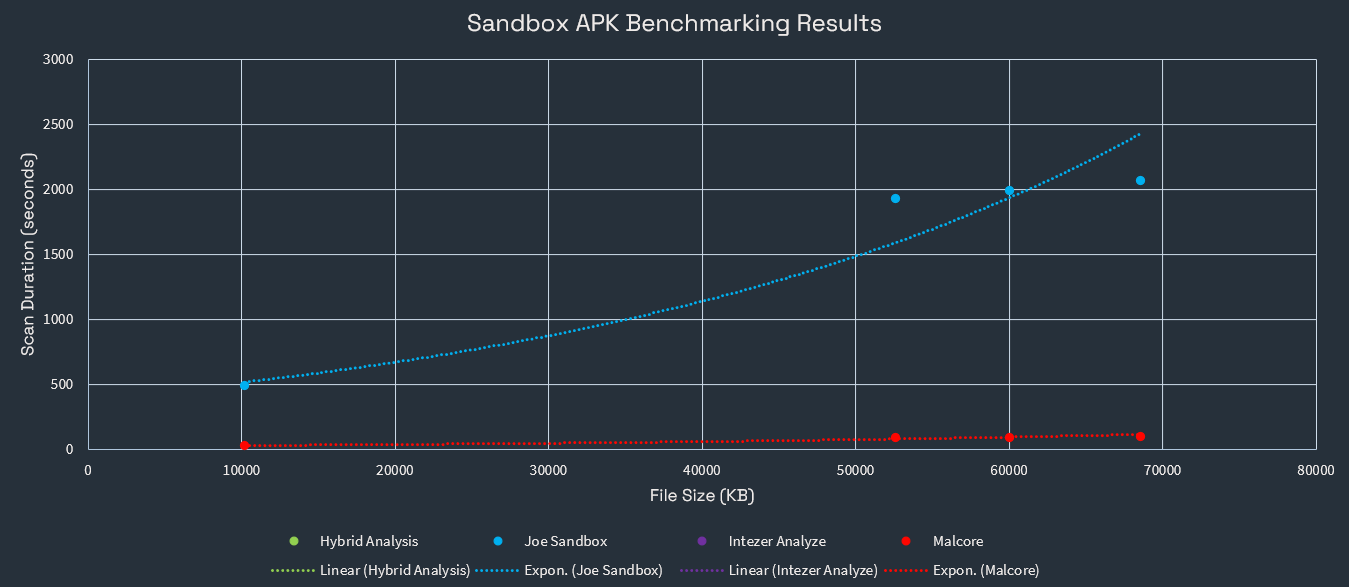

Because the other sandbox environments cannot perform dynamic (sandbox) analysis of APK files, only Joe Sandbox and Malcore produced valid results.

Malcore's analysis was more than 19 times faster than Joe Sandbox's, and its timings stayed consistent throughout the tests.

Methodology

These tests were conducted with an automated script that monitored each platform's sandbox status changes, removing human error from external timing. Although the exact process differed between platforms, in general a timer started when a prompt was presented (i.e., the “status” was set to “running”) and stopped when that prompt was no longer found. Where available, timestamped output was also used to confirm results.
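The timing approach described above can be sketched as a simple polling loop. This is a minimal, hypothetical illustration rather than the script used in these tests: `get_status` is an assumed callable standing in for whatever reads a platform's on-screen status element, and the function name, default poll interval, and timeout are illustrative choices.

```python
import time
from typing import Callable, Optional


def time_running_status(
    get_status: Callable[[], str],
    running_value: str = "running",
    poll_interval: float = 1.0,
    timeout: float = 3600.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[float]:
    """Return the seconds between the status first reading `running_value`
    and the first poll where it no longer does, or None on timeout.

    `get_status` is a hypothetical hook, e.g. a function that reads the
    status element from a sandbox's results page.
    """
    deadline = clock() + timeout
    start: Optional[float] = None
    while clock() < deadline:
        status = get_status()
        if start is None:
            # Start the timer the first time the "running" prompt appears.
            if status == running_value:
                start = clock()
        elif status != running_value:
            # Stop the timer once the "running" prompt is no longer found.
            return clock() - start
        sleep(poll_interval)
    # The status never left "running" (or never reached it) before timeout.
    return None
```

Because the status is only checked once per `poll_interval`, each measurement is only accurate to within about one polling interval. The clock and sleep functions are injectable so the loop can be exercised without waiting in real time.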

Summary of Results

As the graphs above show, Malcore was on average 13.75 times faster than competing sandbox environments across all file types tested. Of note, Malcore was 19.30 times faster than its competitors on APK files.

Malcore’s results were also the most consistently accurate; other platforms missed, for example, files containing canaries. The most notable was “Canary.pdf”, which contained a simple canary token (generated via canarytokens[.]org/generate) designed to alert an email address when the file is opened in Adobe Acrobat.

Limitations / Potential Issues

Because report outcomes lacked timestamps, we wrote a script to automatically time changes to website elements (as described above). This approach, however, has several potential issues and limitations:

The timings are based on how long the results took to appear on screen for the user, so the actual time spent in the sandbox environment may differ.

All tests were conducted from the same geographical location, so natural ping delays could affect the timing results.

Queues and priority ordering could cause some scans to run before others.

Scan times depend on server load at the time of the scan, which differs between days and times of day. Although we tried to run all scans as close together as possible, some platforms restrict additional scans within a given time period, which introduced delays.

Implementations and execution time limits vary: each platform has its own way of limiting the execution time used to evaluate a file.

Analysis Summary

The benchmarking tests looked at Hybrid Analysis, Joe Security, Intezer Analyze, and Malcore. VMRay did not respond or allow access, and Any.Run’s testing procedure differed from the others, which made time trials irrelevant. These tests covered .docx, .pdf, .xlsx, .exe, and .apk files, since these were the most commonly available file types that could be tested on the free plans of the sandbox environments listed.

To summarise the potential issues: the timings may be affected by internet connection speed, and they are calculated purely from how long the results took to appear on screen after the file was submitted for analysis (which may include a page refresh). The results could also favour Malcore because of queue priority ordering. The testing procedure is not necessarily consistent across platforms, which also affects the time taken for each scan. Finally, the timings do not necessarily reflect the accuracy of the results; a scan that took 30 seconds could report a file as clean and miss several IOCs that a scan taking 5 minutes would identify.